What is ChainOpera AI?

ChainOpera® AI (https://ChainOpera.ai) is a next-generation cloud service for LLMs and generative AI. It helps developers launch complex model training, deployment, and federated learning jobs anywhere, on decentralized GPUs, multi-cloud environments, edge servers, and smartphones, easily, economically, and securely.

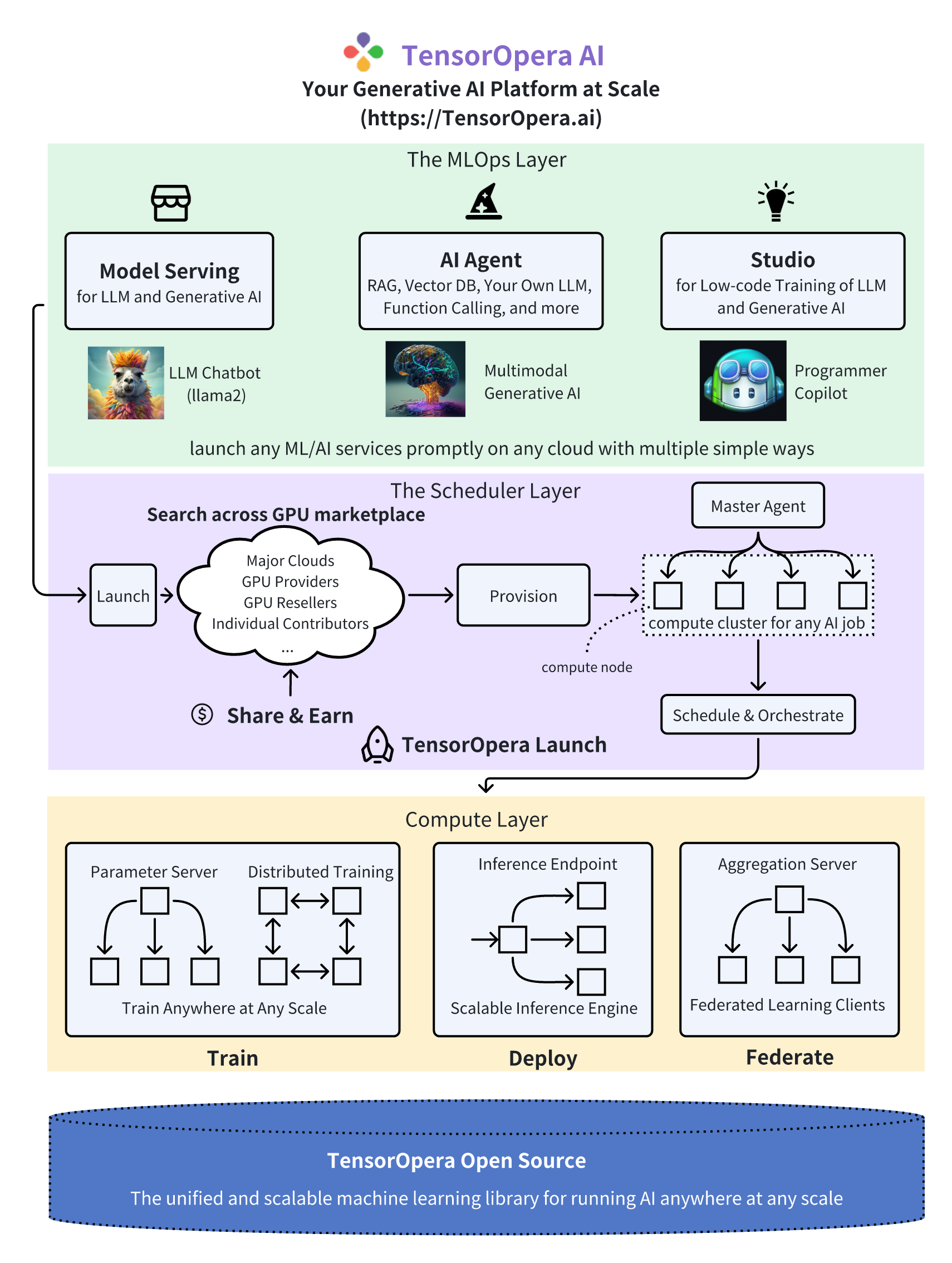

Tightly integrated with the ChainOpera open-source library, ChainOpera AI provides holistic support for three interconnected AI infrastructure layers: user-friendly MLOps, a well-managed scheduler, and high-performance ML libraries for running any AI job across GPU clouds.

A typical workflow is shown in the figure above. When a developer wants to run a pre-built job in Studio or Job Store, ChainOpera® Launch swiftly pairs the AI job with the most economical GPU resources, auto-provisions them, and runs the job, eliminating complex environment setup and management. While the job runs, ChainOpera® Launch orchestrates the compute plane across different cluster topologies and configurations, so that complex AI jobs can run whether they involve model training, deployment, or federated learning. ChainOpera® Open Source is a unified and scalable machine learning library for running these AI jobs anywhere at any scale.

In the MLOps layer of ChainOpera AI

- ChainOpera® Studio embraces the power of generative AI! Access popular open-source foundational models (e.g., LLMs), fine-tune them with your own data, and deploy them scalably and cost-effectively using ChainOpera® Launch on the GPU marketplace.

- ChainOpera® Job Store maintains a list of pre-built jobs for training, deployment, and federated learning. Developers are encouraged to run these jobs directly with customized datasets or models on cheaper GPUs.

In the scheduler layer of ChainOpera AI

- ChainOpera® Launch swiftly pairs AI jobs with the most economical GPU resources, auto-provisions them, and runs the job, eliminating complex environment setup and management. It supports a range of compute-intensive jobs for generative AI and LLMs, such as large-scale training, serverless deployments, and vector DB searches. ChainOpera® Launch also facilitates on-prem cluster management and deployment on private or hybrid clouds.
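
To make the idea of pairing a job with the most economical GPU resources concrete, here is a minimal sketch in Python. It is not ChainOpera® Launch's actual API or scheduling logic: the `GpuOffer` and `JobRequest` types, the `cheapest_offer` function, and the sample prices are all hypothetical, and the matching rule (filter by GPU count and memory, then pick the lowest hourly price) is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GpuOffer:
    """A hypothetical GPU listing on a marketplace (illustrative only)."""
    provider: str
    gpu_type: str
    gpu_count: int
    memory_gb: int          # memory per GPU
    price_per_hour: float   # price for the whole offer, in USD


@dataclass(frozen=True)
class JobRequest:
    """Hypothetical resource requirements declared by a job."""
    name: str
    min_gpus: int
    min_memory_gb: int


def cheapest_offer(job: JobRequest, offers: List[GpuOffer]) -> Optional[GpuOffer]:
    """Return the lowest-priced offer that satisfies the job, or None."""
    feasible = [
        o for o in offers
        if o.gpu_count >= job.min_gpus and o.memory_gb >= job.min_memory_gb
    ]
    # min() with default=None avoids an exception when nothing fits.
    return min(feasible, key=lambda o: o.price_per_hour, default=None)


# Made-up offers and prices, used only to exercise the function.
offers = [
    GpuOffer("cloud-a", "A100", 8, 80, 24.0),
    GpuOffer("cloud-b", "A100", 4, 80, 13.5),
    GpuOffer("edge-c", "RTX 4090", 4, 24, 2.5),
]
job = JobRequest("llm-finetune", min_gpus=4, min_memory_gb=40)
selected = cheapest_offer(job, offers)
```

In this sketch the `edge-c` offer is the cheapest overall but is filtered out because it lacks the required memory, so the job is paired with `cloud-b`. A production scheduler would also weigh factors such as availability, locality, and interconnect, which this example omits.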

In the compute layer of ChainOpera AI

- ChainOpera® Deploy is a model serving platform for high scalability and low latency.

- ChainOpera® Train focuses on distributed training of large and foundational models.

- ChainOpera® Federate is a federated learning platform backed by the most popular federated learning open-source library and the world’s first FLOps (federated learning Ops), offering on-device training on smartphones and cross-cloud GPU servers.

- ChainOpera® Open Source is a unified and scalable machine learning library for running these AI jobs anywhere at any scale.
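
For readers new to federated learning, the sketch below shows the core aggregation step of federated averaging (FedAvg), a widely used technique in which a server combines model parameters trained on separate devices, weighting each by its local sample count. This is a generic illustration under stated assumptions, not the implementation used by ChainOpera® Federate or its open-source library: the `federated_average` function and the flat-list representation of model weights are hypothetical simplifications.

```python
from typing import List, Sequence, Tuple


def federated_average(
    client_updates: Sequence[Tuple[Sequence[float], int]]
) -> List[float]:
    """Weighted average of client parameter vectors.

    Each entry is (weights, num_samples); clients with more local
    samples contribute proportionally more to the global model.
    """
    if not client_updates:
        raise ValueError("at least one client update is required")
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, num_samples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        share = num_samples / total_samples
        for i, w in enumerate(weights):
            global_weights[i] += w * share
    return global_weights


# Two hypothetical clients: a phone with 100 samples, a server with 300.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_model = federated_average(updates)
```

Only the parameters and sample counts leave each device here; the raw training data stays local, which is the property that makes on-device training on smartphones attractive.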